The Global CTO of a Top-10 accounting firm said this to me last week.

“The gimmick of ‘co-pilot’ is coming unstuck. You have solved the emerging problem.”

So what is the emerging problem?

Being able to leverage the best attributes of Generative AI to generate outcomes which are not just accurate and hallucination-free but 100% explainable.

While everyone is focused on extracting value from Generative AI, most are battling with the issues of accuracy, explainability and trust.

There is massive value to be had if we can leverage Generative AI in ways that:

- can handle detailed context

- deliver accurate answers

- are grounded by your own knowledge

- are 100% explainable

Generative AI alone cannot deliver this because Large Language Models (LLMs) cannot evaluate problems logically and in context, nor produce answers that come with a logical chain of reasoning.

Check out this clip from Yann LeCun, one of the “Godfathers” of AI and head of AI at Meta. Yann points out that regardless of whether you ask an LLM a simple question, a complex question or even an impossible question, the amount of computing power expended to create each block of generated content (each token) is the same.

This is not the way real world reasoning works. When humans are presented with complex problems we apply more effort to reasoning over that complexity.

With LLMs alone, the information generated may look convincing but could also be completely false, forcing the user to spend a lot of time checking the veracity of the output.

When Air Canada’s chatbot gave incorrect information to a traveller, the airline argued its chatbot was “responsible for its own actions” but quickly lost its case, making it clear that organisations cannot hide behind AI-powered chatbots that make mistakes.

What’s going wrong is clear.

Generative AI is a machine learning technology that creates compelling predictions, but is not capable of human-like reasoning and cannot provide logical explanations for its generated outputs.

To act on a prediction we need to incorporate judgement.

And while many remain dazzled by the bright lights of Generative AI, those who are working with it in anger are understanding that it is best leveraged as one piece of a bigger architecture.

One architecture that has gained momentum is that of Retrieval Augmented Generation (RAG). It uses well understood mathematical techniques to compare the question being asked with similar looking snippets from a reference source of documents, and then injects those snippets of text into an LLM to help inform an output.
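To make the retrieval step concrete, here is a minimal sketch in Python. It assumes a simple word-count cosine similarity in place of the learned embeddings a production RAG system would typically use, and the snippets, function names and prompt wording are all invented for illustration. It stops at building the prompt; the call to an LLM is left out.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, snippets: list[str], k: int = 2) -> list[tuple[int, str]]:
    """Return the k snippets most similar to the question, with their indices."""
    q = tokenize(question)
    ranked = sorted(
        enumerate(snippets),
        key=lambda pair: cosine(q, tokenize(pair[1])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, retrieved: list[tuple[int, str]]) -> str:
    """Inject the retrieved snippets into the text that would be sent to an LLM."""
    context = "\n".join(f"[{i}] {text}" for i, text in retrieved)
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {question}"


# Hypothetical reference snippets, invented for illustration.
SNIPPETS = [
    "Refund requests for bereavement fares must be submitted within 90 days of travel.",
    "Checked baggage allowance is two bags on international routes.",
    "Bereavement fares apply to immediate family members only.",
]

question = "Can I claim a bereavement fare refund after travel?"
top = retrieve(question, SNIPPETS)
prompt = build_prompt(question, top)
print(prompt)
```

Because each snippet keeps its index, the system can point back at the passages it used. Nothing in this step checks whether the text the LLM generates afterwards actually follows from those passages.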

Although RAG still risks hallucinations and lacks explainability, it has the advantage of being able to make content predictions over targeted document sources and can point at the parts of the document that it used when generating its predicted outputs.

However, RAG is inherently limited by its exploratory nature, which focuses on accumulating knowledge or facts that are then summarised, without a deep understanding of the context or any ability to provide logical reasoning.

You can see a video example of RAR in action here.

Other architectures are being explored to further improve the performance of LLMs, including Retrieval Augmented Thought (RAT) to pull in external data, Semantic Rails to try to keep LLMs on topic, and Prompt Chaining to turn LLM outputs into new LLM inputs.
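As an illustration of the last of these, prompt chaining can be sketched in a few lines of Python. This is a simplified sketch, not any particular framework's API: `run_chain`, `fake_llm` and the templates are hypothetical names, and `fake_llm` is a stand-in so the example runs without a real model.

```python
from __future__ import annotations

from typing import Callable


def run_chain(llm: Callable[[str], str], templates: list[str], first_input: str) -> list[str]:
    """Feed each step's output into the next step's prompt template."""
    outputs = []
    previous = first_input
    for template in templates:
        previous = llm(template.format(previous=previous))
        outputs.append(previous)
    return outputs


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    return f"<model reply to: {prompt}>"


# Hypothetical two-step chain: summarise, then extract obligations.
TEMPLATES = [
    "Summarise this policy text: {previous}",
    "List the obligations in this summary: {previous}",
]

steps = run_chain(fake_llm, TEMPLATES, "Refunds must be requested within 90 days.")
for step in steps:
    print(step)
```

Each step only ever sees the previous step's text, so an error introduced early in the chain is carried forward into every later prompt.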

All of these are designed to diminish the risks of hallucination and poor explainability, but by their nature they cannot eliminate them.

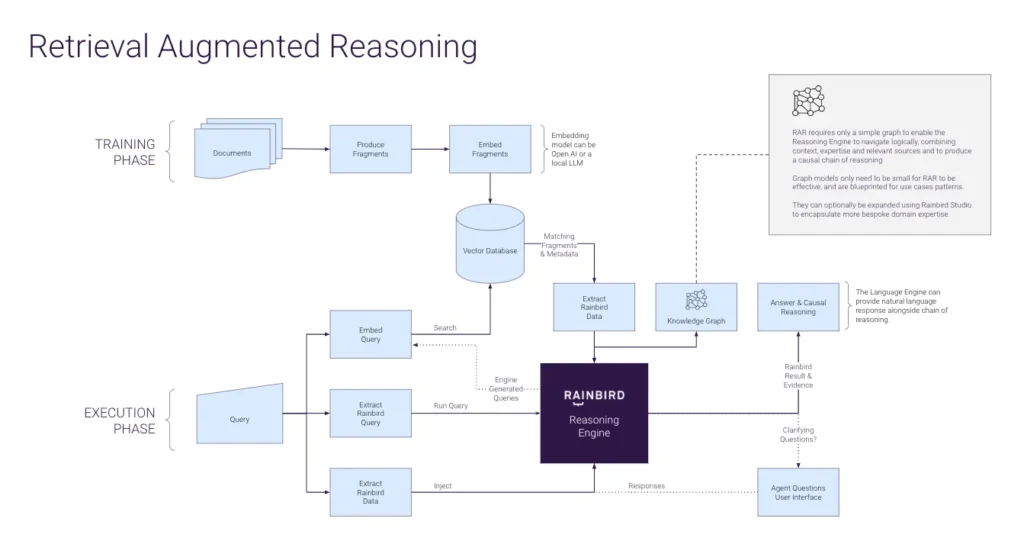

But a new architecture, pioneered by Rainbird, is taking hold: Retrieval Augmented Reasoning (RAR), an innovative approach that transcends the limitations of RAG by integrating a more sophisticated method of interaction with information sources.

Unlike RAG, RAR doesn’t just seek to inform a decision by generating text. Instead it actively and logically reasons like a human would, engaging in an interactive dialogue with the logic contained in the document sources themselves, as well as with human users.

This approach enables the AI to gather context, and then employ logical reasoning to produce answers that are accompanied by a logical rationale — something LLMs alone cannot do.

The RAR architecture requires a powerful symbolic reasoning engine to work, something Rainbird has developed and been using in symbolic AI projects for over a decade. With RAR, that reasoning engine uses a very high level knowledge graph to ground and drive the reasoning process over the document sources.
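To give a feel for what "answers accompanied by a logical rationale" can mean in practice, here is a toy forward-chaining rule engine in Python. It is a generic textbook-style sketch, not Rainbird's engine or API: the `Rule` type, the rule names, the facts and the policy sections are all invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # facts that must all be known for the rule to fire
    conclusion: str        # fact added when the rule fires
    source: str            # where the rule came from, kept for the rationale


def reason(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Apply rules until nothing new can be concluded, recording why each fact was added."""
    known = set(facts)
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and rule.conditions <= known:
                known.add(rule.conclusion)
                trace.append(
                    f"{rule.conclusion} because {' and '.join(sorted(rule.conditions))} "
                    f"[{rule.name}, {rule.source}]"
                )
                changed = True
    return known, trace


# Hypothetical rules and facts, invented for illustration.
RULES = [
    Rule("R2", frozenset({"high_risk_transaction"}), "manual_review_required",
         "hypothetical policy, section 2"),
    Rule("R1", frozenset({"amount_over_limit", "new_counterparty"}), "high_risk_transaction",
         "hypothetical policy, section 1"),
]
FACTS = {"amount_over_limit", "new_counterparty"}

known, trace = reason(FACTS, RULES)
for line in trace:
    print(line)
```

Every conclusion in `known` is either a starting fact or has a line in `trace` naming the rule and source that produced it. The engine can only conclude what its rules entail, which is the property that makes the output explainable.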

The distinction between RAG and RAR is not merely a technical one. It fundamentally changes how AI systems can be applied to solve real-world problems. You can ask RAR models much more nuanced questions and get results that are comprehensive, accurate and represent the ultimate in guard-railing. They cannot hallucinate.

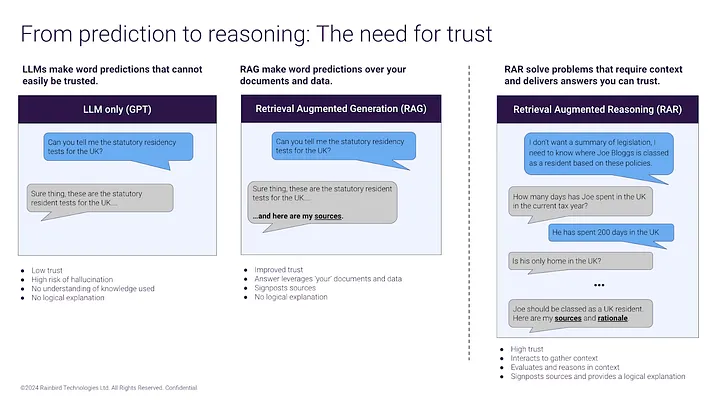

Let’s look at how these methods compare:

- LLMs alone produce answers based on their training from the public internet. Risks of hallucination are high and the lack of explainability is a serious problem.

- RAG’s approach, while useful for exploratory queries, falls short when faced with the need to understand the nuances of specific situations or to provide answers that are not only accurate but also logically sound and fully explainable. While it can point to sources for its predictions, it can still hallucinate and cannot explain its reasoning.

- RAR, however, addresses all these challenges head-on by enabling a more interactive and iterative process of knowledge retrieval, consultation, and causal reasoning.

For example, RAR can:

- Enable lawyers to interact with legislation and complex case law in the context of their case, and obtain a causal chain of reasoning for worked answers.

- Power tax solutions, reasoning over large amounts of regulation to find appropriate tax treatments at a transaction level.

- Take natural language medical notes and accurately apply medical codes.

These use cases all represent the ability to reason in a way that is contextually relevant, free from hallucination, and backed by clear chains of reasoning.

The RAR approach is sector agnostic and enables the rapid creation of digital assistants in any domain.

But there is yet another benefit of the RAR architecture.

Because it uses a symbolic reasoning engine and a knowledge graph to navigate document sources, the RAR model can be extended to incorporate any amount of human expertise by adding that knowledge to the graph itself, complete with weights and certainties.

This enables RAR models to leverage both documented regulation, policy or operating procedure and human tribal knowledge, enhancing contextual decision-making capabilities to new heights.
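One way to picture documented knowledge and expert knowledge sitting in the same graph is the Python sketch below. It is an illustration under stated assumptions, not a description of how Rainbird represents or combines certainties: the `Edge` type, the example edges, and the choice to multiply certainties along a chain are all assumptions made for this example.

```python
from __future__ import annotations

from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Edge:
    subject: str
    relation: str
    obj: str
    certainty: float  # 0.0 to 1.0
    origin: str       # "document" or "expert"


def find_path(graph: list[Edge], start: str, goal: str, seen: set[str] | None = None) -> list[Edge]:
    """Depth-first search for a chain of edges leading from start to goal."""
    seen = seen or {start}
    for edge in graph:
        if edge.subject != start or edge.obj in seen:
            continue
        if edge.obj == goal:
            return [edge]
        rest = find_path(graph, edge.obj, goal, seen | {edge.obj})
        if rest:
            return [edge] + rest
    return []


def path_certainty(path: list[Edge]) -> float:
    """Assumed combination rule: multiply the certainties along the chain."""
    return prod(edge.certainty for edge in path) if path else 0.0


# Hypothetical graph: one edge taken from a document, one added by an expert.
GRAPH = [
    Edge("transaction", "involves", "new counterparty", 1.0, "document"),
    Edge("new counterparty", "suggests", "enhanced checks", 0.8, "expert"),
]

path = find_path(GRAPH, "transaction", "enhanced checks")
certainty = path_certainty(path)
for edge in path:
    print(f"{edge.subject} {edge.relation} {edge.obj} ({edge.origin}, {edge.certainty})")
print(f"overall certainty: {certainty}")
```

Keeping the `origin` on each edge means the final rationale can show which steps came from written policy and which came from a person's judgement.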

So where does this matter most?

RAR is particularly valuable in regulated markets, where the ability to create evidence-based rationales is crucial to trust and therefore to adoption.

By providing answers that come with source references and logical explanations of how those answers were achieved, RAR fosters a level of trust and transparency that is essential in today’s increasingly regulated world.

RAG remains a valuable tool in the AI toolkit, but the advent of RAR represents a significant leap forward in the ability to harness the power of AI for complex decision-making.

By offering nuanced answers that are grounded in logical reasoning and contextual understanding, RAR opens up new possibilities for AI applications that require a high degree of trust and explainability.

As we continue to navigate the challenges and opportunities presented by AI, approaches like RAR will be instrumental in ensuring that our technology not only answers our questions but does so in a way that we can understand and trust.